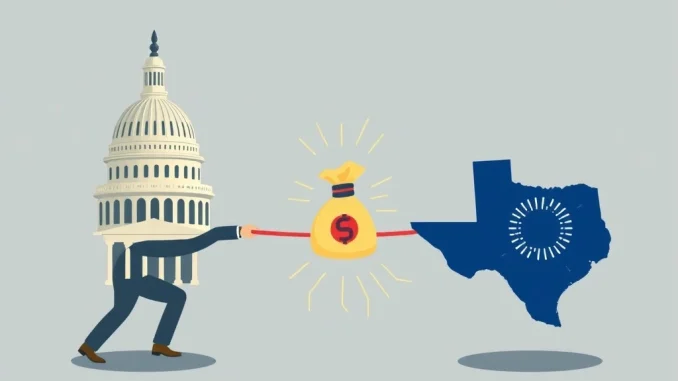

The U.S. government has unveiled a significant shift in its approach to artificial intelligence: a new directive that threatens to withhold federal AI funding from states whose AI regulations are deemed overly “burdensome.” For anyone following how government policy shapes technological development and the broader economy, this is a pivotal moment. The step signals a heightened federal focus on streamlining AI innovation and minimizing bureaucratic hurdles, but it also raises critical questions about state autonomy and the future of AI governance.

The Looming Threat to State Autonomy and Federal AI Funding

At the heart of the directive is the White House AI Action Plan, released under the Trump administration. The plan directs federal agencies to scrutinize state-level AI laws. Its core tenet is that federal grants could be withheld from jurisdictions where existing or proposed rules are perceived to “weaken federal support” or “waste these funds.” While the plan acknowledges the possibility of “thoughtfully crafted legislation” that doesn’t “unduly restrict innovation,” it establishes a framework designed to pressure states into aligning with federal priorities on AI development.

What does this mean in practical terms? Federal agencies will now evaluate state AI policies before awarding grants. This gives them the authority to reduce or even deny funds if they identify perceived regulatory conflicts. Furthermore, the Federal Communications Commission (FCC) has been tasked with assessing whether state-level AI laws infringe upon its established regulatory jurisdiction. This approach isn’t entirely new; it mirrors legislative proposals seen in Congress, such as the contentious “Big Beautiful Bill” that aimed to ban state AI rules for a decade, and Senator Ted Cruz’s initiative, which explicitly links federal funding to states rolling back stringent AI regulations. The clear message is that the federal government intends to assert its influence over the fragmented landscape of AI governance.

Navigating the Complexities of AI Regulation

The policy is painted in broad strokes, and that has introduced significant ambiguity, particularly around what constitutes a “burdensome” rule. The lack of a precise definition has raised concerns among legal experts, state governments, and industry observers about potential legal disputes and compliance challenges. Critics argue that the policy disproportionately favors large tech firms, potentially at the expense of public interests. Sarah Myers West of the AI Now Institute said the policy prioritizes “corporate interests over the needs of everyday people.”

The vagueness extends to practical implementation. Grace Gedye of Consumer Reports highlighted the uncertainty over which specific federal funds are at risk and how states are expected to adapt to undefined guidelines. Similarly, Forrester analyst Alla Valente pointed out that terms like “ideological bias” and “burdensome regulations” remain undefined, complicating implementation for both federal agencies and state governments. States must reinterpret their existing laws without clear federal guidance, a situation Mashable characterized as a “lurch.”

The implications of this regulatory uncertainty are significant. States that have already invested time and resources into developing their own AI governance frameworks may find themselves at odds with federal mandates, potentially leading to a patchwork of legal challenges and a chilling effect on localized innovation. The delicate balance between fostering innovation and ensuring public safety and ethical AI development becomes even more precarious when the rules of engagement are unclear.

Shaping the US AI Strategy: Innovation vs. Oversight

Beyond regulatory threats, the administration’s overarching US AI strategy includes several key pillars designed to accelerate AI development and maintain technological leadership. One notable item is a revision to the National Institute of Standards and Technology (NIST) AI Risk Management Framework. Controversially, the plan calls for removing the framework’s references to misinformation, climate change, and diversity, equity, and inclusion. This change has drawn criticism, with some arguing that it could inadvertently introduce bias into AI models by excluding crucial data sources.

Infrastructure and export strategies also form integral parts of this policy. The plan seeks to expedite permitting for crucial AI data centers and semiconductor facilities, even proposing exemptions under the National Environmental Policy Act (NEPA) for projects deemed to have minimal environmental impact. This aggressive push aims to remove bottlenecks in the physical infrastructure necessary for AI growth. Concurrently, the policy enforces stringent export controls on semiconductor subsystems, a strategic move to protect U.S. technological leadership in a highly competitive global arena.

Industry groups have offered mixed reactions. The National Association of Manufacturers, for instance, praised the regulatory reforms, seeing them as a positive step towards reducing red tape. However, critics continue to voice concerns that the exclusion of specific data sources from the NIST framework could lead to unintended biases in AI development, potentially undermining the very goals of fairness and inclusivity that AI aims to achieve.

The Debate Over AI Policy and Ideological Neutrality

A particularly contentious aspect of the new AI policy is its emphasis on ideological neutrality in AI models. While seemingly benign, this focus has sparked significant debate. Georgetown University’s Bonnie Montano cautioned that excluding contested data might conflict with the plan’s stated goals of fairness and inclusivity. The challenge lies in defining “ideological neutrality” in a way that doesn’t censor or sideline the diverse perspectives needed to develop robust and unbiased AI systems.

The push for ideological neutrality could have profound implications for how AI models are trained and deployed, particularly in areas like content moderation, information retrieval, and even scientific research. If the definition of “neutrality” is too narrow, it could stifle the development of AI that truly reflects the complexities of human society. This delicate balance between preventing bias and ensuring comprehensive data inclusion is a central challenge for future AI policy development.

Driving AI Innovation While Balancing Ethical Concerns

Ultimately, the administration’s strategy reflects broader ambitions to position the U.S. as a global leader in AI innovation. The policy aims to accelerate research, development, and deployment of AI technologies by removing perceived regulatory barriers and bolstering critical infrastructure. The idea is that by reducing the regulatory burden on states and streamlining federal processes, the U.S. can outpace competitors in the global AI race.

However, the success of this strategy hinges on its ability to balance these innovation incentives with robust ethical safeguards. While expediting AI development is a clear goal, the potential for unintended consequences – such as algorithmic bias, privacy infringements, or the misuse of powerful AI tools – remains a significant concern. The policy’s ambiguity around key terms and its revisions to established frameworks have led many stakeholders to question whether the pursuit of speed might come at the cost of crucial ethical considerations.

The plan reportedly incorporated over 10,000 public comments, according to a BBC report, underscoring how contentious and complex AI regulation has become. The policy’s rollout also coincides with President Trump’s international efforts, including relaxed export limits on Nvidia and AMD chips to China and new infrastructure deals in Gulf countries, signaling a broader global strategy for U.S. technological leadership.

As federal agencies begin the complex process of implementation, all eyes will be on how states adapt to these new pressures and whether the administration provides clearer definitions for regulatory compliance. The outcome of this policy will undoubtedly shape the future of AI development in the United States, influencing everything from economic competitiveness to the ethical frameworks governing this transformative technology.

Frequently Asked Questions (FAQs)

Q1: What is the core threat of the new U.S. AI policy to states?

The core threat is that the U.S. government will withhold federal funding from states that implement AI regulations deemed “burdensome” or those that could “weaken federal support” for AI innovation. This aims to pressure states to align with federal AI policy priorities.

Q2: Which federal agencies are involved in implementing this AI policy?

Federal agencies, in general, are mandated to scrutinize state AI laws before awarding grants. Specifically, the Federal Communications Commission (FCC) is tasked with assessing whether state-level AI laws infringe on its regulatory jurisdiction.

Q3: What are some of the criticisms leveled against this new AI regulation approach?

Critics argue that the policy favors large tech firms over public interests, and they point to vague definitions of “burdensome” rules and “ideological bias.” There are also concerns that the planned revisions to the NIST AI Risk Management Framework, which drop references to misinformation and DEI, could inadvertently introduce bias into AI models.

Q4: How does this policy aim to boost U.S. AI innovation?

The policy aims to boost AI innovation by reducing perceived regulatory hurdles for states, expediting permitting for AI data centers and semiconductor facilities (including potential NEPA exemptions), and enforcing export controls on semiconductor subsystems to protect U.S. technological leadership.

Q5: What is the significance of the revisions to the NIST AI Risk Management Framework?

The revisions to the NIST AI Risk Management Framework called for in the plan drop references to misinformation, climate change, and diversity, equity, and inclusion. This has sparked debate, with critics worrying that excluding these areas could lead to biased AI models and conflict with goals of fairness and inclusivity.

Q6: How might states react to the threat of withheld federal AI funding?

States face pressure to reinterpret existing laws and adapt their AI regulatory frameworks to align with federal priorities. The ambiguity of the guidelines could lead to compliance challenges, legal disputes, and a cautious approach to new state-level AI regulations to avoid losing crucial federal funds.